
Understanding Kubernetes Cost Challenges

Why Kubernetes Cost Optimization Is Hard

Strategy 1: Right-Size Pods and Nodes

Strategy 2: Implement Efficient Autoscaling

Strategy 3: Eliminate Idle and Wasted Resources

Strategy 4: Optimize Node Pools and Instance Types

Strategy 5: Improve Workload Scheduling and Resource Isolation

Strategy 6: Gain Visibility with Cost Monitoring and FinOps Practices

Common Kubernetes Cost Optimization Mistakes

Final Thoughts

FAQs: Kubernetes Cost Optimization

Understanding Kubernetes Cost Challenges

Kubernetes itself is free and open source, but running it is not. The real costs come from the underlying infrastructure: compute, storage, networking, and the managed services provided by cloud vendors such as AWS, Azure, and Google Cloud.

The most common Kubernetes cost drivers include:

- Over-provisioned CPU and memory
- Underutilized nodes running 24/7
- Inefficient autoscaling configurations
- Idle development and staging environments
- Persistent volumes that are never cleaned up
- Lack of cost visibility at the namespace or workload level

Without active cost governance, Kubernetes environments tend to grow organically—and inefficiently.

Why Kubernetes Cost Optimization Is Hard

Kubernetes abstracts infrastructure complexity, which is great for developers but challenging for cost control. Several factors make cost optimization difficult:

- Pods, nodes, and services constantly scale up and down, which makes traditional, static cost tracking ineffective.

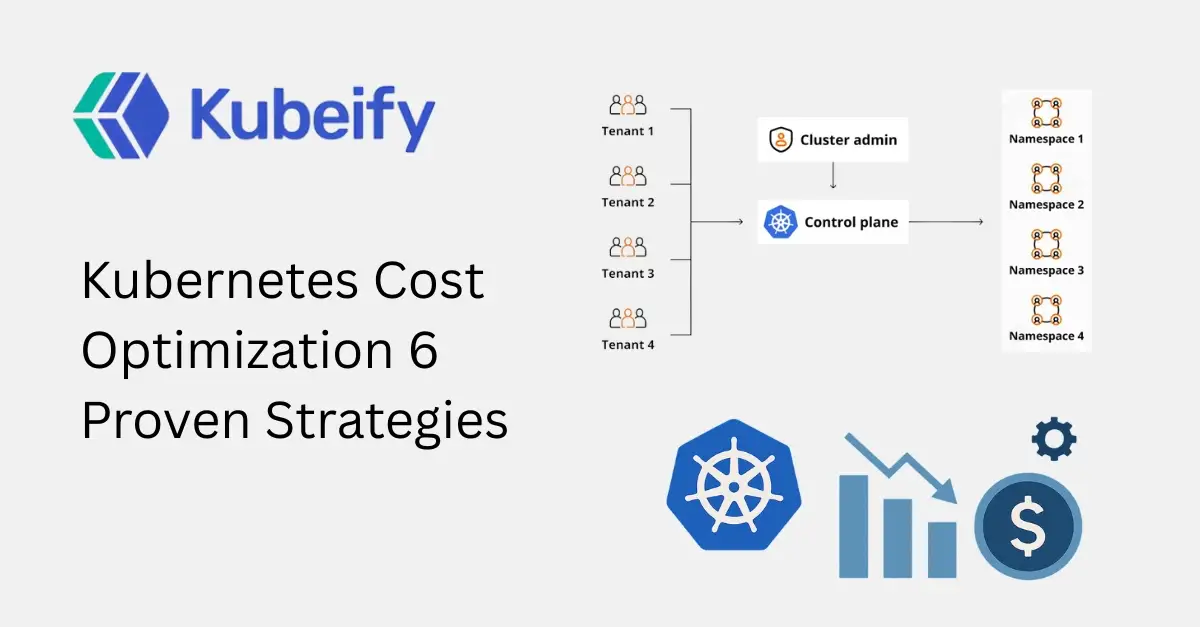

- Multiple teams and applications share the same cluster, which makes it hard to attribute costs accurately.
- Teams often request more resources than needed “just in case,” leading to low utilization.
- When no team owns Kubernetes costs, optimization becomes nobody’s responsibility.

This is why intentional Kubernetes cost optimization strategies are essential.

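Strategy 1: Right-Size Pods and Nodes

One of the biggest contributors to Kubernetes cloud costs is over-allocated resources. Many pods request far more CPU and memory than they actually use. Because the scheduler places pods according to their requests rather than their actual usage, inflated requests force the cluster to run more, or larger, nodes.

Common causes of over-provisioning:

- Requests set equal to limits without supporting data
- Resource values copy-pasted across services
- Legacy configurations that are never revisited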

How to right-size effectively:

- Analyze real usage with metrics from tools such as Prometheus, Metrics Server, or your cloud provider’s monitoring to understand actual CPU and memory consumption.
- Set requests close to average usage and limits based on realistic peak needs, not worst-case assumptions.
- Use the Vertical Pod Autoscaler (VPA), which recommends or automatically applies resource adjustments based on historical usage.

Benefits of right-sizing:

- Higher node utilization
- Fewer required nodes
- Lower compute costs
- Fewer scheduling failures

Right-sizing alone can reduce Kubernetes costs by 20–40% in many environments.
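As a minimal sketch of the request/limit guidance above, the Python function below turns a list of observed CPU usage samples (in millicores, assumed to come from a source such as Prometheus) into a suggested request and limit. The request is the average usage; the limit is a chosen percentile plus headroom rather than the absolute maximum. The function names, the nearest-rank percentile, and the 20% headroom are illustrative assumptions, not the algorithm VPA uses.

```python
import math
import statistics


def nearest_rank_percentile(samples, pct):
    """Nearest-rank percentile (pct in 1..100), using integer math only."""
    ordered = sorted(samples)
    rank = max(1, -(-pct * len(ordered) // 100))  # ceil(pct * n / 100)
    return ordered[rank - 1]


def recommend_cpu(usage_millicores, peak_pct=95, headroom_pct=20):
    """Hypothetical helper: return (request_m, limit_m) from usage samples.

    request ~ average usage; limit ~ a realistic peak (percentile) plus
    headroom, never lower than the request.
    """
    if not usage_millicores:
        raise ValueError("need at least one usage sample")
    request = math.ceil(statistics.mean(usage_millicores))
    peak = nearest_rank_percentile(usage_millicores, peak_pct)
    limit = -(-peak * (100 + headroom_pct) // 100)  # ceil(peak * (1 + headroom))
    return request, max(limit, request)


# Ten samples with one short spike to 250m.
samples = [100, 120, 110, 130, 250, 115, 125, 105, 140, 135]
request_m, limit_m = recommend_cpu(samples, peak_pct=90)
print(f"request: {request_m}m, limit: {limit_m}m")
```

With these samples the 90th percentile (140m) ignores the one-off 250m spike, so the sketch suggests a 133m request and a 168m limit instead of sizing for the worst case. In practice you would run this over days of data and compare the result with VPA recommendations.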

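Strategy 2: Implement Efficient Autoscaling

Autoscaling is one of Kubernetes’ strongest features, but only when it is configured correctly.

The Horizontal Pod Autoscaler (HPA) scales the number of pod replicas based on CPU, memory, or custom metrics. However, poor configurations can cause:

- Over-scaling during short traffic spikes
- Delayed scale-down, which wastes resources
- Inefficient scaling driven by poorly chosen metric thresholds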

HPA best practices:

- Use custom metrics, such as request rate or queue depth, when CPU or memory is a poor proxy for load
- Tune scale-down stabilization windows
- Avoid aggressive scaling policies
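The scaling arithmetic behind these tuning knobs can be sketched in a few lines of Python. The replica formula, desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), and the default tolerance of 0.1 follow the Kubernetes HPA documentation; the stabilizer mimics the documented scale-down behavior of using the highest recommendation within the window (300 seconds by default). This is a simplified model that ignores min/max replica bounds, scaling policies, and missing metrics.

```python
import math
from collections import deque


def desired_replicas(current_replicas, current_value, target_value, tolerance=0.1):
    """HPA core formula: ceil(current * current_value / target_value)."""
    ratio = current_value / target_value
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: do nothing
    return math.ceil(current_replicas * ratio)


class ScaleDownStabilizer:
    """Use the highest recommendation seen in the last `window_s` seconds.

    Scale-ups take effect immediately (a new, higher value is the max),
    while a brief dip cannot shrink the deployment until the window passes.
    """

    def __init__(self, window_s=300):
        self.window_s = window_s
        self.history = deque()  # (timestamp_s, recommended_replicas)

    def stabilize(self, now_s, recommendation):
        self.history.append((now_s, recommendation))
        # Drop recommendations older than the window (the newest always stays).
        while self.history[0][0] < now_s - self.window_s:
            self.history.popleft()
        return max(r for _, r in self.history)


stabilizer = ScaleDownStabilizer(window_s=300)
print(stabilizer.stabilize(0, desired_replicas(4, 90, 60)))    # spike: 6
print(stabilizer.stabilize(60, desired_replicas(6, 30, 60)))   # dip: still 6
print(stabilizer.stabilize(400, desired_replicas(6, 30, 60)))  # window passed: 3
```

A longer window trades slower scale-down (and higher cost) for fewer replica flaps; a shorter one saves money sooner but risks thrashing under bursty traffic.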

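The Cluster Autoscaler adds nodes when pods cannot be scheduled because of insufficient capacity, and removes nodes that remain underutilized. Cluster Autoscaler best practices:

- Use node auto-provisioning wisely, with cluster-wide resource limits in place
- Enable scale-down of empty nodes
- Avoid mixing incompatible workloads on the same node pool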
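To make “underutilized” concrete: the Cluster Autoscaler documentation describes a node as a scale-down candidate when the sums of its pods’ CPU and memory requests are both below a threshold fraction of the node’s allocatable capacity (50% by default, set with --scale-down-utilization-threshold). The sketch below computes that check from hypothetical node data. The real autoscaler also verifies that the node’s pods can be rescheduled elsewhere and respects PodDisruptionBudgets before removing anything.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """Hypothetical node model: allocatable capacity plus pod requests."""
    name: str
    cpu_allocatable_m: int
    mem_allocatable_mi: int
    pod_requests: list = field(default_factory=list)  # [(cpu_m, mem_mi), ...]


def utilization(node):
    """Request-based utilization: the higher of the CPU and memory ratios."""
    cpu = sum(c for c, _ in node.pod_requests) / node.cpu_allocatable_m
    mem = sum(m for _, m in node.pod_requests) / node.mem_allocatable_mi
    return max(cpu, mem)


def scale_down_candidates(nodes, threshold=0.5):
    """Names of nodes whose request-based utilization is below the threshold."""
    return [n.name for n in nodes if utilization(n) < threshold]


nodes = [
    Node("node-a", 4000, 16384, [(3000, 8192)]),  # 75% CPU: keep
    Node("node-b", 4000, 16384, [(500, 2048)]),   # 12.5% CPU and memory
    Node("node-c", 4000, 16384),                  # empty
]
print(scale_down_candidates(nodes))  # ['node-b', 'node-c']
```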

Autoscaling done right ensures you pay only for what you need, when you need it.

Strategy 3: Eliminate Idle and Wasted Resources

Idle resources are silent cost killers in Kubernetes. Common sources include:

- Dev and staging environments running 24/7
- Orphaned persistent volumes
- Unused load balancers and IPs
- Completed jobs and unused namespaces

To reduce idle waste:

- Shut down dev and test clusters outside working hours.
- Automatically clean up completed jobs and temporary resources.
- Run periodic audits to detect unused services, volumes, and namespaces.

Organizations often discover 10–30% of their Kubernetes spend is tied to idle resources.
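Two of these audits are easy to script. The sketch below assumes the JSON produced by kubectl get pv -o json and lists PersistentVolumes whose status.phase is Released (their claim was deleted) or Available (never bound) as cleanup candidates to review. It also encodes a hypothetical office-hours policy (Monday to Friday, 08:00 to 19:00) that a scheduled job could use to decide whether dev environments should be running. For completed Jobs, Kubernetes can delete them automatically through the Job’s ttlSecondsAfterFinished field.

```python
import json
from datetime import datetime


def orphaned_volumes(pv_list_json):
    """Names of PVs not bound to any claim (Released or Available phase)."""
    items = json.loads(pv_list_json).get("items", [])
    return [
        pv["metadata"]["name"]
        for pv in items
        if pv.get("status", {}).get("phase") in ("Released", "Available")
    ]


def dev_env_should_run(now, start_hour=8, end_hour=19):
    """Hypothetical policy: run only Monday-Friday between start and end hour."""
    return now.weekday() < 5 and start_hour <= now.hour < end_hour


# Inline sample in the shape of `kubectl get pv -o json` output.
sample = json.dumps({"items": [
    {"metadata": {"name": "pv-logs"}, "status": {"phase": "Bound"}},
    {"metadata": {"name": "pv-old-db"}, "status": {"phase": "Released"}},
    {"metadata": {"name": "pv-unused"}, "status": {"phase": "Available"}},
]})
print(orphaned_volumes(sample))                      # ['pv-old-db', 'pv-unused']
print(dev_env_should_run(datetime(2024, 1, 6, 10)))  # Saturday: False
```

A weekday 08:00 to 19:00 schedule keeps an environment up for 55 of 168 weekly hours, roughly a two-thirds reduction in its running time. Released volumes with a Retain reclaim policy keep their underlying cloud disk, and its cost, until someone deletes it.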

Strategy 4: Optimize Node Pools and Instance Types

Not all workloads need the same type of compute. Create multiple node pools for:

- Stateless services
- Stateful workloads
- Batch jobs
- GPU or memory-intensive applications

Spot (preemptible) instances are ideal for fault-tolerant workloads such as batch processing and CI jobs.

ARM-based instances are often cheaper and more power-efficient for compatible workloads.

Large instances with low utilization waste money. Smaller, right-sized nodes often lead to better bin-packing and lower costs.
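A rough way to compare node sizes is to bin-pack your pods’ CPU requests onto each candidate size and check how much provisioned capacity is left idle. The sketch below uses first-fit decreasing, a classic bin-packing heuristic, with hypothetical request values and CPU as the only dimension. The real kube-scheduler places pods one at a time by filtering and scoring nodes, so treat the result as a capacity-planning estimate, not a prediction of actual placement.

```python
def first_fit_decreasing(requests_m, node_capacity_m):
    """Pack CPU requests (millicores) onto identical nodes; return used m per node."""
    nodes = []
    for req in sorted(requests_m, reverse=True):
        if req > node_capacity_m:
            raise ValueError(f"a {req}m request does not fit on a {node_capacity_m}m node")
        for i, used in enumerate(nodes):
            if used + req <= node_capacity_m:
                nodes[i] += req
                break
        else:  # no existing node had room: provision another one
            nodes.append(req)
    return nodes


def packing_report(requests_m, node_capacity_m):
    """Return (node_count, provisioned_m, utilization) for one node size."""
    nodes = first_fit_decreasing(requests_m, node_capacity_m)
    provisioned = len(nodes) * node_capacity_m
    return len(nodes), provisioned, sum(nodes) / provisioned


pods = [1500, 1500, 1500, 1500, 700, 700, 300]  # hypothetical requests, 7700m total
for size in (16000, 4000):
    count, provisioned, util = packing_report(pods, size)
    print(f"{size}m nodes: {count} node(s), {provisioned}m provisioned, {util:.0%} used")
```

With these numbers, one 16-core node is only about 48% used, while two 4-core nodes provision half as much CPU at about 96% utilization. Whether that is cheaper still depends on your provider’s per-size pricing.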

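Strategy 5: Improve Workload Scheduling and Resource Isolation

Efficient scheduling ensures Kubernetes uses resources optimally.

Taints, tolerations, and node affinity let you prevent expensive nodes from running low-priority workloads and ensure that critical services get the resources they need.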
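The matching rules behind taints and tolerations are simple enough to model directly. The Python sketch below is a simplified version of the rules in the Kubernetes documentation: a toleration matches a taint when the keys and effects match and either the operator is Exists or the values are equal (Equal is the default operator); an empty key with Exists tolerates everything; and a pod is kept off a node if any NoSchedule or NoExecute taint is left untolerated. The pool=gpu taint is a hypothetical example of what you might apply with kubectl taint nodes <node> pool=gpu:NoSchedule.

```python
def tolerates(toleration, taint):
    """Simplified toleration-matching rules for a single taint."""
    # An empty toleration effect matches every effect.
    if toleration.get("effect") and toleration["effect"] != taint["effect"]:
        return False
    if toleration.get("operator", "Equal") == "Exists":
        # Exists ignores values; an empty key tolerates every taint.
        return not toleration.get("key") or toleration["key"] == taint["key"]
    return (toleration.get("key") == taint["key"]
            and toleration.get("value", "") == taint.get("value", ""))


def can_schedule(pod_tolerations, node_taints):
    """Hard taints (NoSchedule/NoExecute) must all be tolerated."""
    hard = [t for t in node_taints if t["effect"] in ("NoSchedule", "NoExecute")]
    return all(any(tolerates(tol, t) for tol in pod_tolerations) for t in hard)


gpu_taint = {"key": "pool", "value": "gpu", "effect": "NoSchedule"}
gpu_toleration = {"key": "pool", "operator": "Equal", "value": "gpu",
                  "effect": "NoSchedule"}

print(can_schedule([], [gpu_taint]))                # False: plain pods stay off
print(can_schedule([gpu_toleration], [gpu_taint]))  # True: GPU jobs may land here
```

A toleration only permits scheduling onto the tainted node; pairing it with node affinity is what keeps those workloads on the expensive pool.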

Pod priority and preemption allow high-priority workloads to preempt less critical ones, which reduces the need to over-provision capacity for them.

Resource quotas let you set CPU and memory limits per team or environment to prevent uncontrolled growth.
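Resource quotas are enforced at admission: if creating a pod would push a namespace’s tracked usage past a hard limit such as requests.cpu or requests.memory, the API server rejects it. The sketch below models that check for those two resources. It assumes a hypothetical dict layout mirroring a ResourceQuota’s spec.hard and status.used, and its quantity parser handles only plain numbers, the m suffix, and Ki/Mi/Gi, a small subset of what Kubernetes accepts.

```python
from decimal import Decimal

BINARY_SUFFIXES = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30}


def parse_cpu(quantity):
    """'500m' -> 500, '2' -> 2000, '0.5' -> 500 (millicores)."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return int(Decimal(quantity) * 1000)


def parse_memory(quantity):
    """'256Mi' -> bytes; plain numbers are taken as bytes."""
    for suffix, factor in BINARY_SUFFIXES.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)


def quota_allows(hard, used, pod_requests):
    """True if adding the pod's requests keeps the namespace within its quota."""
    checks = (("requests.cpu", "cpu", parse_cpu),
              ("requests.memory", "memory", parse_memory))
    for quota_key, pod_key, parse in checks:
        if quota_key not in hard:
            continue  # resource not constrained by this quota
        total = parse(used.get(quota_key, "0")) + parse(pod_requests.get(pod_key, "0"))
        if total > parse(hard[quota_key]):
            return False
    return True


hard = {"requests.cpu": "4", "requests.memory": "8Gi"}  # hypothetical team quota
used = {"requests.cpu": "3500m", "requests.memory": "6Gi"}
print(quota_allows(hard, used, {"cpu": "500m", "memory": "1Gi"}))  # True
print(quota_allows(hard, used, {"cpu": "750m", "memory": "1Gi"}))  # False
```

One difference from the real behavior: when a quota tracks requests.cpu or requests.memory, Kubernetes rejects pods that do not specify those requests (unless a LimitRange supplies defaults), whereas this sketch treats a missing value as zero.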

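Strategy 6: Gain Visibility with Cost Monitoring and FinOps Practices

You can’t optimize what you can’t see. Common visibility challenges include:

- Cloud bills don’t map cleanly to Kubernetes workloads
- Teams lack cost accountability
- Engineering and finance teams operate in silos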

Use tools that provide:

- Namespace-level cost breakdowns
- Pod and workload cost attribution
- Trend analysis and anomaly detection
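Namespace-level breakdowns come down to attributing node spend to the workloads that reserve it. The sketch below charges each pod for its CPU and memory requests, since requests determine how much capacity the scheduler sets aside, and sums the result per namespace. The unit prices and pod data are hypothetical placeholders; dedicated cost-monitoring tools use real pricing data and also account for details this sketch ignores, such as idle capacity and shared costs.

```python
from collections import defaultdict

# Hypothetical blended unit prices (USD); derive real ones from your bill.
CPU_CORE_HOUR = 0.031
MEM_GIB_HOUR = 0.004


def namespace_costs(pods, hours):
    """pods: iterable of (namespace, cpu_cores_requested, mem_gib_requested)."""
    totals = defaultdict(float)
    for namespace, cpu_cores, mem_gib in pods:
        hourly = cpu_cores * CPU_CORE_HOUR + mem_gib * MEM_GIB_HOUR
        totals[namespace] += hourly * hours
    return dict(totals)


pods = [
    ("checkout", 2, 4),
    ("checkout", 1, 2),
    ("search", 4, 16),
]
# 730 hours is roughly one month.
for namespace, cost in sorted(namespace_costs(pods, hours=730).items()):
    print(f"{namespace}: ${cost:,.2f} per month")
```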

FinOps brings engineering, finance, and operations together to:

- Define cost ownership
- Set budgets and alerts
- Continuously optimize spend
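Budgets and alerts can start as simply as projecting each team’s month-to-date spend to month end and comparing it with that team’s budget. The sketch below uses a naive linear run-rate forecast and a hypothetical 80% warning threshold; the team names, figures, and thresholds are illustrative, and more sophisticated forecasting may account for seasonality or one-off events.

```python
import calendar
from datetime import date


def forecast_month_end(spend_to_date, today):
    """Naive run-rate forecast: average daily spend so far x days in month."""
    days_in_month = calendar.monthrange(today.year, today.month)[1]
    return spend_to_date / today.day * days_in_month


def budget_alerts(spend_by_team, budgets, today, warn_ratio=0.8):
    """Map team -> 'over' or 'warning' based on projected month-end spend."""
    alerts = {}
    for team, spend in spend_by_team.items():
        budget = budgets.get(team)
        if budget is None:
            continue  # no budget defined: an ownership gap worth fixing
        projected = forecast_month_end(spend, today)
        if projected > budget:
            alerts[team] = "over"
        elif projected > warn_ratio * budget:
            alerts[team] = "warning"
    return alerts


spend = {"payments": 4000, "search": 1000, "data": 2500}
budgets = {"payments": 10000, "search": 5000, "data": 8000}
print(budget_alerts(spend, budgets, today=date(2024, 4, 10)))
# {'payments': 'over', 'data': 'warning'}
```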

Cost optimization is not a one-time project—it’s an ongoing practice.

Common Kubernetes Cost Optimization Mistakes

- Focusing only on infrastructure, not workloads
- Ignoring non-production environments
- Overusing limits “just to be safe”
- Treating cost optimization as a finance-only problem
- Making aggressive cuts that hurt performance

The goal is efficient Kubernetes, not cheap Kubernetes.

Final Thoughts

Kubernetes cost optimization is about balancing performance, reliability, and efficiency. By applying the six strategies covered in this guide, teams can significantly reduce cloud spend while maintaining high-quality service delivery.

The most successful organizations treat cost optimization as a continuous engineering discipline, supported by data, automation, and shared ownership.

If you’re running Kubernetes at scale, optimizing costs is no longer optional—it’s a competitive advantage.

FAQs: Kubernetes Cost Optimization

Kubernetes cost optimization is the practice of reducing cloud infrastructure expenses by managing Kubernetes resources efficiently without hurting performance.

Kubernetes costs typically increase because of over-provisioned resources, idle workloads, inefficient autoscaling, and a lack of visibility.

Many organizations can save 20–50% with proper optimization strategies.

Right-sizing means adjusting CPU and memory requests and limits to match actual workload usage.

Autoscaling reduces costs only when it is configured correctly; poorly configured autoscaling can increase them.

Spot instances offer significantly lower prices and are well suited to fault-tolerant workloads.

FinOps is a cultural and operational approach that aligns engineering, finance, and operations to manage cloud costs effectively.

Non-production environments do not need to run 24/7; scheduling them to shut down outside working hours can significantly reduce costs.

To attribute costs to teams, use namespace-level cost allocation and monitoring tools.

Cost optimization is not a one-time task. It requires continuous monitoring, optimization, and collaboration.
