
Kubernetes simplifies application deployment and scaling—but without properly setting resource requests and limits, you risk overloading nodes, wasting cloud budget, or causing unpredictable pod evictions.

Introduction

What Are Resource Requests and Limits in Kubernetes?

Why Are Resource Requests and Limits Important?

The Risks of Not Setting Requests and Limits

How Kubernetes Uses Requests and Limits

Best Practices for Setting Resource Requests

Best Practices for Setting Resource Limits

Right-Sizing Strategies: CPU vs Memory

Tools for Monitoring and Optimization

Real-World Example: Preventing Node Overload

CI/CD Considerations for Resource Management

Mistakes to Avoid

Conclusion

FAQs

Introduction

This guide dives deep into the best practices for configuring resource requests and limits in Kubernetes to ensure high performance, stability, and cost efficiency—especially in production environments.

What Are Resource Requests and Limits in Kubernetes?

In Kubernetes, each container can be assigned two main resource parameters:

Resource Requests: The minimum amount of CPU/memory Kubernetes guarantees a container will get.

Resource Limits: The maximum amount a container can use before being throttled or terminated.

These are typically defined in the container spec:

```yaml
resources:
  requests:
    memory: "512Mi"
    cpu: "500m"
  limits:
    memory: "1Gi"
    cpu: "1000m"
```

CPU is measured in millicores (500m = 0.5 core, 1000m = 1 core). Memory is measured in bytes, usually written with binary suffixes such as Mi (mebibytes) and Gi (gibibytes).
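For context, the `resources` block lives inside each container entry of a Pod template. Here is a minimal sketch of a Deployment carrying the same values. The name `web-api` and the image `example.com/web-api:1.0` are hypothetical placeholders:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web-api                          # hypothetical name
spec:
  replicas: 2
  selector:
    matchLabels:
      app: web-api
  template:
    metadata:
      labels:
        app: web-api
    spec:
      containers:
        - name: web-api
          image: example.com/web-api:1.0 # hypothetical image
          resources:                     # set per container, not per Pod
            requests:
              memory: "512Mi"            # 512 mebibytes reserved at scheduling time
              cpu: "500m"                # 0.5 core reserved at scheduling time
            limits:
              memory: "1Gi"              # container is OOM-killed above this
              cpu: "1000m"               # CPU use above 1 core is throttled
```

Requests are set per container. When checking whether a Pod fits on a node, the scheduler adds up the requests of the Pod's regular containers; init containers are accounted for separately.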

Why Are Resource Requests and Limits Important?

Setting accurate requests and limits helps you:

✅ Avoid overprovisioning (wasting resources)

✅ Prevent underprovisioning (causing instability or OOM errors)

✅ Ensure fair scheduling across pods

✅ Enable autoscaling to function properly

✅ Maintain cluster health and predictability

✅ Optimize cost, especially in managed services like GKE, EKS, or AKS
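Autoscaling is a concrete case. The Horizontal Pod Autoscaler (HPA) expresses CPU utilization as a percentage of each container's CPU request, so without a request it has nothing to measure against.

Below is a minimal sketch using the `autoscaling/v2` API. It assumes Metrics Server is installed and targets a Deployment named `web-api`, which is a hypothetical name:

```yaml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: web-api-hpa                # hypothetical name
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: web-api                  # hypothetical Deployment
  minReplicas: 2
  maxReplicas: 10
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70   # 70% of the CPU request, averaged across Pods
```

With a 500m CPU request, a 70% target corresponds to roughly 350m of CPU per Pod on average. Halving the request would make the HPA scale out at half the absolute load.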

The Risks of Not Setting Requests and Limits

Failing to define proper values leads to:

❌ Pod eviction under memory pressure

❌ Unfair scheduling by kube-scheduler

❌ Throttling of CPU-bound apps

❌ Unpredictable performance

❌ Cluster-wide resource imbalance

❌ Higher cloud bills due to oversized nodes

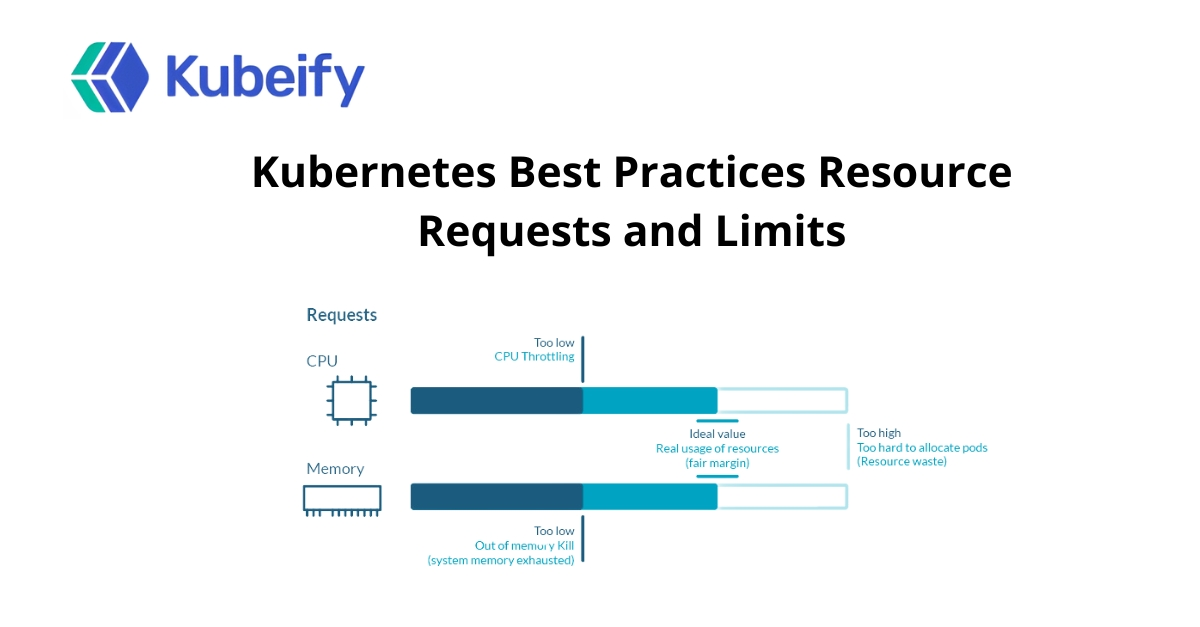

Scheduler Behavior: The scheduler places pods based on requests, not limits.

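Kubelet Behavior: The kubelet, together with the container runtime, enforces limits at runtime using Linux cgroups.

OOM Killer: If a container exceeds its memory limit, the kernel's OOM killer terminates it, and Kubernetes reports its status as “OOMKilled”.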

CPU Throttling: CPU limits aren’t fatal but can reduce performance as containers get throttled.
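To see the split between scheduling and enforcement in action, you can run a Pod that deliberately allocates more memory than its limit. This sketch is adapted from the memory-limit example in the Kubernetes documentation. It assumes the public `polinux/stress` image can be pulled from your cluster, and the Pod name is hypothetical:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: oom-demo                   # hypothetical name
spec:
  containers:
    - name: stress
      image: polinux/stress
      resources:
        requests:
          memory: "50Mi"           # the scheduler only looks at this value
        limits:
          memory: "100Mi"          # enforced at runtime via cgroups
      command: ["stress"]
      # Try to allocate 250M, well above the 100Mi limit.
      args: ["--vm", "1", "--vm-bytes", "250M", "--vm-hang", "1"]
```

The scheduler places this Pod using only the 50Mi request. Once `stress` tries to allocate 250M against the 100Mi limit, the container should be terminated, and `kubectl describe pod oom-demo` should show `OOMKilled` as the last termination reason. Delete the Pod afterwards, since it will keep restarting.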

Best Practices for Setting Resource Requests

Gather metrics from Prometheus, Metrics Server, or Datadog to observe real usage patterns.

Don’t guess—analyze CPU & memory usage during load testing or production hours.

Set requests around the 90th percentile of observed usage to balance stability and cost.

Applying a generic value such as 100m to every pod is bad practice. Customize requests per application.
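One way to get the 90th-percentile figures is a set of Prometheus recording rules over the cAdvisor metrics that the kubelet exposes. This sketch assumes Prometheus already scrapes `container_cpu_usage_seconds_total` and `container_memory_working_set_bytes`. The group name, rule names, and one-day window are hypothetical choices:

```yaml
groups:
  - name: resource-right-sizing    # hypothetical group name
    interval: 1m
    rules:
      # Per-container CPU usage in cores, as a 5-minute rate.
      - record: container:cpu_usage_cores:rate5m
        expr: rate(container_cpu_usage_seconds_total{container!="", container!="POD"}[5m])
      # 90th percentile of that rate over the last day: a candidate CPU request.
      - record: container:cpu_usage_cores:p90_1d
        expr: quantile_over_time(0.9, container:cpu_usage_cores:rate5m[1d])
      # 90th percentile of working-set memory over the last day: a candidate memory request.
      - record: container:memory_working_set_bytes:p90_1d
        expr: quantile_over_time(0.9, container_memory_working_set_bytes{container!="", container!="POD"}[1d])
```

The second rule reads the series recorded by the first, so its values only cover a full day once the rules have been running that long. Compare the recorded values with each container's current request before adjusting it.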

Best Practices for Setting Resource Limits

Set limits with headroom above requests. Example: If your request is 500Mi, set the limit to 800Mi–1Gi to absorb spikes with less risk of OOM kills.

Without limits, a single rogue pod can starve others.

CPU throttling can cause latency and jitter—test your services under limit constraints.
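Per-namespace defaults are one way to make sure no container runs without limits. A LimitRange fills in requests and limits at admission time for containers that omit them, and it can reject containers whose limits exceed a ceiling. Here is a minimal sketch; the namespace `team-a` and all values are hypothetical:

```yaml
apiVersion: v1
kind: LimitRange
metadata:
  name: container-defaults
  namespace: team-a          # hypothetical namespace
spec:
  limits:
    - type: Container
      defaultRequest:        # applied when a container sets no request
        cpu: 250m
        memory: 256Mi
      default:               # applied when a container sets no limit
        cpu: 500m
        memory: 512Mi
      max:                   # containers with limits above these are rejected
        cpu: "2"
        memory: 2Gi
```

A LimitRange only acts when Pods are created. Existing Pods keep their current values until they are recreated.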

Right-Sizing Strategies: CPU vs Memory

Memory usage is sticky: once allocated, it’s rarely released.

Right-size by measuring working set size, not peak.

CPU is burstable and shared.

Set CPU requests based on steady-state needs; allow limits to absorb burst load.
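To check whether a CPU limit is absorbing bursts or cutting them off, you can track the share of CFS scheduling periods in which a container was throttled. Here is a minimal sketch as a Prometheus recording rule. It assumes the cAdvisor metrics `container_cpu_cfs_throttled_periods_total` and `container_cpu_cfs_periods_total` are scraped; the group and rule names are hypothetical:

```yaml
groups:
  - name: cpu-throttling           # hypothetical group name
    rules:
      # Fraction of CFS periods (0 to 1) in which the container hit its CPU limit.
      - record: container:cpu_cfs_throttled:ratio_rate5m
        expr: |
          sum by (namespace, pod, container) (rate(container_cpu_cfs_throttled_periods_total{container!=""}[5m]))
            /
          sum by (namespace, pod, container) (rate(container_cpu_cfs_periods_total{container!=""}[5m]))
```

A ratio that stays high during normal traffic suggests the CPU limit sits below what the service needs for its bursts.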

Tools for Monitoring and Optimization

| Tool | Purpose |
| --- | --- |
| Goldilocks | Recommends optimal CPU/memory settings |
| Prometheus + Grafana | Visualize container resource usage |
| Kubernetes Metrics Server | Lightweight metrics collection |
| Kubecost | Cost analysis based on resource usage |
| Vertical Pod Autoscaler (VPA) | Suggests request/limit values and can apply them automatically |
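As an example of the VPA entry, the autoscaler can run in a recommendation-only mode, which reports suggested requests without evicting Pods. This sketch assumes the VPA components from the Kubernetes autoscaler project are installed. It targets a Deployment named `web-api`, which is a hypothetical name:

```yaml
apiVersion: autoscaling.k8s.io/v1
kind: VerticalPodAutoscaler
metadata:
  name: web-api-vpa                # hypothetical name
spec:
  targetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: web-api                  # hypothetical Deployment
  updatePolicy:
    updateMode: "Off"              # recommend only; never evict or resize Pods
  resourcePolicy:
    containerPolicies:
      - containerName: "*"         # apply to every container in the Pod
        controlledResources: ["cpu", "memory"]
        minAllowed:
          cpu: 100m
          memory: 128Mi
        maxAllowed:
          cpu: "2"
          memory: 2Gi
```

`kubectl describe vpa web-api-vpa` then lists target, lower-bound, and upper-bound recommendations per container. Goldilocks builds on the same mechanism. It creates VPA objects in this mode for workloads in namespaces labeled `goldilocks.fairwinds.com/enabled=true` and shows their recommendations in a dashboard.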

Real-World Example: Preventing Node Overload

A dev team deployed a microservice-heavy workload without proper limits. Over time, one pod consumed 90% of node memory due to a memory leak, leading to eviction of critical services.

Fix:

Set requests.memory: 300Mi

Set limits.memory: 600Mi

Monitored usage via Grafana dashboards

Result: No more OOM kills, and cost savings through right-sized nodes.
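Expressed as a manifest fragment, the fix above corresponds to a container spec along these lines. The container name and image are hypothetical. CPU values are left out because the example only describes memory settings:

```yaml
containers:
  - name: orders-service             # hypothetical container name
    image: example.com/orders:2.3    # hypothetical image
    resources:
      requests:
        memory: "300Mi"              # reserved on the node at scheduling time
      limits:
        memory: "600Mi"              # caps this container's memory growth
```

With the limit in place, the container's memory is capped at 600Mi. If it grows past that, the kernel kills this container alone, instead of the node running short of memory and evicting other Pods.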

CI/CD Considerations for Resource Management

Use static analysis or OPA Gatekeeper to enforce resource fields in manifests.

Validate YAML in pull requests to check for missing or oversized values.

Introduce canary deployments to validate performance under set limits.
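For the Gatekeeper route, a policy consists of a ConstraintTemplate (the Rego logic) plus a Constraint that applies it. The following is a minimal sketch, not one of the maintained policies from the Gatekeeper library. The template name `k8srequireresources` is hypothetical, and the policy only checks regular containers in Pods:

```yaml
apiVersion: templates.gatekeeper.sh/v1
kind: ConstraintTemplate
metadata:
  name: k8srequireresources        # must be the lowercase form of the kind below
spec:
  crd:
    spec:
      names:
        kind: K8sRequireResources
  targets:
    - target: admission.k8s.gatekeeper.sh
      rego: |
        package k8srequireresources

        # Deny any container missing cpu or memory under requests or limits.
        violation[{"msg": msg}] {
          container := input.review.object.spec.containers[_]
          field := ["requests", "limits"][_]
          resource := ["cpu", "memory"][_]
          not container.resources[field][resource]
          msg := sprintf("container <%v> is missing resources.%v.%v", [container.name, field, resource])
        }
---
apiVersion: constraints.gatekeeper.sh/v1beta1
kind: K8sRequireResources
metadata:
  name: require-cpu-memory-resources
spec:
  match:
    kinds:
      - apiGroups: [""]
        kinds: ["Pod"]
```

Because the constraint matches Pods, a non-compliant Deployment is still accepted, but its ReplicaSet cannot create Pods, and the violation message shows up in the ReplicaSet's events. Matching Deployments directly would require the Rego to read `spec.template.spec` instead.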

Mistakes to Avoid

🚫 Setting requests too low → Unstable performance

🚫 Setting limits too tight → Frequent throttling/OOM

🚫 Skipping requests → Scheduler can’t place pod properly

🚫 Uniform values for all pods → Wasted resources

🚫 Ignoring autoscaling → Missed optimization potential

Conclusion

Properly configuring resource requests and limits is one of the most powerful, yet often overlooked, practices in Kubernetes optimization. It affects not only the reliability of your services but also your bottom line, especially in cloud environments.

Start small. Measure. Adjust. Automate. Over time, you’ll gain a more efficient, stable, and scalable Kubernetes infrastructure.

FAQs

Kubernetes may schedule pods inefficiently, and they can be evicted or throttled during resource contention.

Yes, but cautiously. CPU limits can throttle apps. For latency-sensitive apps, test performance under constrained conditions.

Highly recommended. Without it, a memory leak can exhaust the node's memory and trigger evictions of other pods.

No. The HPA (Horizontal Pod Autoscaler) calculates CPU and memory utilization as a percentage of the resource requests, so it needs requests to evaluate its thresholds.

Use metrics from tools like Prometheus or Goldilocks based on actual usage.

Requests are guaranteed minimums; limits are enforced maximums.

No. CPU is throttled, not terminated. Memory overuse results in termination.

Not always. It’s better to give room for spikes with higher limits.

Yes. Tools like Goldilocks, VPA, and Kubecost help automate right-sizing.

Regularly—especially after updates, usage changes, or performance issues.
